Web Scraping Using BeautifulSoup and Visualization

Hi folks,

I'm a software developer, and today I'm sharing some exciting content about data science. As many of you know, data science is all about handling data with different techniques. Some people use tools, some write code, and some even do it manually, which is the least efficient way.

Before you can analyze or visualize data, though, you have to collect it from different sources. Many non-technical people collect data through surveys, which is fine to some extent, but let's not go into that here. Most of us coders instead write a few lines of code to gather large amounts of data from anywhere it is available online.

So today I'm going to walk you through some basic Python code that is easy to follow and that lets you grab data online in your own domain of interest.

To scrape data, first choose the website you want to scrape information from.

Note: Some forms of web scraping are illegal, and scraping data from any site carries risk. If the site owners detect malicious activity, or if your requests are frequent enough to affect the server's response, they can take action against you or block your IP from their website. So be careful: do not scrape private data, and do not send requests continuously, without the website owner's permission.


Choose any editor you like. My recommendation is Google Colab.

Colab is a highly configurable notebook environment from Google that is widely used for data analysis and visualization. Many industry experts also use it for their work.

First, import all the required libraries in Colab.

These commands download the libraries so that you can use them in your notebook.

requests → lets you send HTTP requests.

Beautiful Soup → pulls data out of HTML and XML files.

pandas → data analysis and manipulation tool.

re → regular expressions; a regular expression specifies a set of strings that match it.

matplotlib → plotting library, used here for 2D plots.

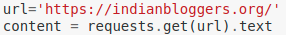
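Here is a minimal sketch of that first cell. It assumes the libraries are already installed. Colab usually ships with all of them; elsewhere you would first run pip install requests beautifulsoup4 pandas matplotlib.

```python
# Assumes the packages are installed (Colab usually preinstalls them);
# otherwise run: pip install requests beautifulsoup4 pandas matplotlib
import re                        # regular expressions
import requests                  # send HTTP requests
import pandas as pd              # tabular data analysis
import matplotlib.pyplot as plt  # plotting
from bs4 import BeautifulSoup    # parse HTML/XML

# Quick sanity check that the imports worked
print("requests", requests.__version__)
print("pandas", pd.__version__)
```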

Next, create a variable url that holds the link of the website you want to scrape. Then use the requests package to fetch that page into a variable called content. When you print content, you will see the website's HTML code as one long string.
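The fetch step can be sketched as a small helper. This is an assumption-based sketch, not the post's original code. The helper name fetch_html, the example URL, the User-Agent string and the 10-second timeout are all hypothetical choices. It returns response.text, which is the decoded HTML string.

```python
import requests

# Hypothetical example URL; replace it with the site you want to scrape.
url = "https://example.com/"

def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Download a page and return its HTML as a string (hypothetical helper)."""
    # Assumed polite defaults: identify the client and never wait forever.
    headers = {"User-Agent": "Mozilla/5.0 (compatible; tutorial-scraper)"}
    response = requests.get(url, headers=headers, timeout=timeout)
    response.raise_for_status()  # raise an error on 4xx/5xx responses
    return response.text         # decoded HTML string
```

In the notebook you would then call content = fetch_html(url) and print(content[:500]) to look at the first part of the HTML.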

BeautifulSoup supports several parsers for this HTML string:

-> Python's HTML parser ("html.parser")

-> lxml's HTML parser ("lxml")

-> lxml's XML parser ("xml")

-> html5lib ("html5lib")

Here we use Python's HTML parser because its output is clean and easy to understand. It also ships with Python, so nothing extra needs to be installed.

Now parse content as HTML using Beautiful Soup.

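Here is a minimal sketch of the parsing step. A tiny hypothetical HTML string stands in for the fetched content so that the example runs offline. The "html.parser" argument selects Python's built-in parser.

```python
from bs4 import BeautifulSoup

# Hypothetical stand-in for the `content` string fetched earlier.
content = """
<html><head><title>Blog list</title></head>
<body><a href="http://hawkeyeview.blogspot.in/">Hawkeye View</a></body></html>
"""

# "html.parser" = Python's built-in parser; "lxml" or "html5lib" also work if installed.
soup = BeautifulSoup(content, "html.parser")

print(soup.title.string)      # text inside the <title> tag
print(soup.prettify()[:200])  # indented view of the parsed tree
```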

To fetch all the anchor (<a>) tags in this HTML document, use the find_all method.

find_all returns a list of all the <a> tags in the document.
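A short sketch of find_all on a hypothetical snippet follows. It also shows a.get("href"), which returns None instead of raising an error when a tag has no href attribute.

```python
from bs4 import BeautifulSoup

# Hypothetical snippet with two links and one non-link tag.
html = """<p>
<a href="http://hawkeyeview.blogspot.in/">Hawkeye View</a>
<a href="/about">About</a>
<span>not a link</span>
</p>"""

soup = BeautifulSoup(html, "html.parser")
anchors = soup.find_all("a")  # every <a> tag in the document

for a in anchors:
    # get_text(strip=True) trims surrounding whitespace from the link text
    print(a.get_text(strip=True), "->", a.get("href"))
```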

Next, create a dictionary in which to store all the links and titles from the site.

Now loop over all the anchor tags. Keep only the ones whose link text is longer than one character, whose link starts with "http", and whose link does not contain any keyword marking links you don't want to extract.

Then append each link's text and its URL to the matching lists in the dictionary.
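The filtering loop described above can be sketched as follows. The dictionary keys "title" and "links", the EXCLUDE keywords and the sample HTML are all hypothetical; adapt them to the site you are scraping.

```python
from bs4 import BeautifulSoup

# Hypothetical sample page; in the tutorial this would be the parsed `content`.
html = """
<a href="http://hawkeyeview.blogspot.in/">Hawkeye View</a>
<a href="https://someone.wordpress.com/">Someone's Blog</a>
<a href="https://www.blogger.com/profile/123">Profile</a>
<a href="/relative/link">Relative</a>
<a href="https://example.medium.com/">X</a>
"""
soup = BeautifulSoup(html, "html.parser")

# Hypothetical keywords for links we do not want (e.g. profile/navigation links).
EXCLUDE = ("blogger.com", "google.com")

# Hypothetical key names: parallel lists of link titles and URLs.
blogs = {"title": [], "links": []}

for a in soup.find_all("a"):
    text = a.get_text(strip=True)
    href = a.get("href", "")
    if (
        len(text) > 1                              # skip empty or one-character text
        and href.startswith("http")                # keep absolute http/https links
        and not any(k in href for k in EXCLUDE)    # drop unwanted links
    ):
        blogs["title"].append(text)
        blogs["links"].append(href)

print(blogs)
```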

Then convert this information into a table with the pandas library, set the index to the title column, and store the table in a variable named blog_list.

You can inspect the table with print(blog_list) and then save it to a CSV file so that you can use it outside Colab.

This creates a bloggers.csv file in the same directory as your project (the current working directory).
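A sketch of the table-and-CSV step follows. It assumes the scraped dictionary uses hypothetical "title" and "links" keys, and save_blog_list is a hypothetical helper name. DataFrame.set_index and DataFrame.to_csv are standard pandas methods.

```python
import pandas as pd

# Hypothetical output of the scraping loop: parallel lists of titles and links.
blogs = {
    "title": ["Hawkeye View", "Someone's Blog"],
    "links": ["http://hawkeyeview.blogspot.in/", "https://someone.wordpress.com/"],
}

def save_blog_list(data: dict, path: str = "bloggers.csv") -> pd.DataFrame:
    """Build a table indexed by title and write it to CSV (hypothetical helper)."""
    blog_list = pd.DataFrame(data).set_index("title")
    blog_list.to_csv(path)  # a relative path lands in the current working directory
    return blog_list

blog_list = save_blog_list(blogs)
print(blog_list)
```

Reading the file back with pd.read_csv("bloggers.csv", index_col="title") restores the same table.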

That's all it takes to scrape a website's data. Each website will pose its own challenges in the extraction logic, but the basic concept stays the same.

Now it's time to visualize the data by category, that is, by the platform each blog is published on. We can find the platform by checking the blog's URL.

For example, from the link http://hawkeyeview.blogspot.in/ we can tell that the hawkeyeview blog is published on the Blogspot platform.

To do this, we search through all the anchor tags and store the count of each category in a dictionary named "cat".

The resulting "cat" dictionary maps each category to its count.
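The category count can be sketched with the re module imported earlier. The PLATFORMS list, the "other" fallback and the count_categories name are hypothetical; the post's own category rules are not shown. Matching runs on the URL's host name only.

```python
import re
from urllib.parse import urlparse

# Hypothetical platforms to look for in blog host names.
PLATFORMS = ("blogspot", "wordpress", "medium", "tumblr")
PATTERN = re.compile("|".join(map(re.escape, PLATFORMS)))

def count_categories(links):
    """Count how many links belong to each hosting platform (hypothetical helper)."""
    cat = {}
    for link in links:
        host = urlparse(link).netloc.lower()  # e.g. "hawkeyeview.blogspot.in"
        match = PATTERN.search(host)
        category = match.group(0) if match else "other"
        cat[category] = cat.get(category, 0) + 1
    return cat

links = [
    "http://hawkeyeview.blogspot.in/",
    "https://someone.wordpress.com/",
    "https://another.blogspot.com/",
    "https://example.org/blog",
]
cat = count_categories(links)
print(cat)
```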

Finally, I plot a bar graph of these category counts using matplotlib.
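A minimal bar-chart sketch follows. It assumes a cat dictionary shaped like the one above; the counts, labels, colour and the plot_categories name are hypothetical. Matplotlib treats the string keys as categorical x positions.

```python
import matplotlib.pyplot as plt

# Hypothetical category counts in the shape produced by the counting step.
cat = {"blogspot": 2, "wordpress": 1, "other": 1}

def plot_categories(cat):
    """Draw one bar per platform category (hypothetical helper)."""
    fig, ax = plt.subplots(figsize=(6, 4))
    ax.bar(list(cat.keys()), list(cat.values()), color="steelblue")
    ax.set_xlabel("Platform")
    ax.set_ylabel("Number of blogs")
    ax.set_title("Blogs per platform")
    fig.tight_layout()
    return fig, ax

fig, ax = plot_categories(cat)
plt.show()  # in Colab the chart renders inline
```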
